I have a home file and compute server that provides several TB of storage and supports a bunch of virtual machines (for setup see here: https://chezstephens.org.uk/server-upgrade/). The server uses a zfs 3-copy mirror and automated snapshots to provide what should be reliable storage.

However reliable the system might be, it is vulnerable to admin user error, theft, fire or a plane landing on the garage where it is kept. I wanted to create a backup in a different physical location that could take over in the event of a disaster.

I serve a couple of community websites, so I’d like recovery from disaster to be assured and relatively painless.

A friend recently gave me a surplus 4-core 2.2 GHz HP desktop with 3 GB of memory. I replaced its hard disk with a 100GB SSD for the system and added a 4TB hard disk (a Seagate Barracuda, which I already had) for backup storage. I upgraded the memory by replacing 2x512MB with 2x2GB for a few pounds. That’s the hardware complete.

Installing the software

The OS installed was Ubuntu 18.04 LTS. The choice was made because this has long term support (hence the LTS), supports zfs, and is the same OS as my main server. My other choice would have been Debian.

OS install

I wanted to run the OS from a small zfs pool on the SSD. Getting the OS to boot from zfs is relatively straightforward, but it is not directly supported by the installer. To set this up: install the OS on a small partition (say 10GB), leaving most of the SSD unused. Then install the zfs utilities, create a partition and pool (“os”) on the rest of the SSD, copy the OS to a dataset on “os”, create the grub configuration and install the boot loader. The details for a comprehensive root install are here: https://github.com/zfsonlinux/zfs/wiki/Ubuntu-18.04-Root-on-ZFS. I used a subset of these instructions to perform the steps listed above.
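A minimal sketch of the first part of that sequence follows. It only prints the commands and runs none of them. The partition name /dev/sdX2, the dataset name os/root and the pool options are placeholders and assumptions, not a record of the exact commands used on this machine.

```shell
#!/bin/sh
# Print an outline of the copy-to-zfs steps. Nothing is executed.
# Assumptions: $1 is the spare SSD partition and os/root is the dataset
# that will hold the OS. Both names are placeholders.
plan_root_on_zfs() {
    part=$1
    cat <<EOF
apt install --yes zfsutils-linux
zpool create -o ashift=12 -O mountpoint=none -R /mnt os $part
zfs create -o mountpoint=/ os/root
rsync -aHAX --one-file-system / /mnt/
EOF
}

plan_root_on_zfs /dev/sdX2
```

The grub configuration and boot loader install then happen inside a chroot of /mnt, as described in the linked guide.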

VNC install

I set up VNC to remotely manage this system. You can follow the instructions here: https://www.digitalocean.com/community/tutorials/how-to-install-and-configure-vnc-on-ubuntu-18-04. I never did get vncserver to work when started from the user’s command line. It works fine when started as a system service running as that user, except that I don’t have a .Xresources file in my home directory. This doesn’t appear to have any effect, apart from some icons missing from the start menu. As this doesn’t bother me, I didn’t spend any time trying to fix it.

So the system boots up from zfs, and after a pause I can connect to it from VNC.

QEMU install

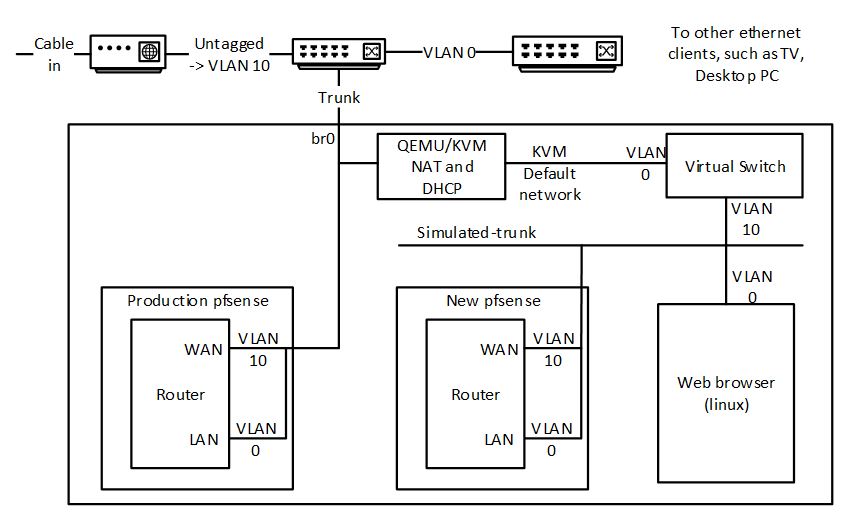

I followed the instructions here: https://www.linuxtechi.com/install-configure-kvm-ubuntu-18-04-server/, except I didn’t follow the instructions for creation of the bridge.

For the networking side, I followed the instructions here: https://linuxconfig.org/install-and-set-up-kvm-on-ubuntu-18-04-bionic-beaver-linux.

I installed virt-manager and libvirt-daemon-driver-storage-zfs. I could then create virtual machines with zvol device storage for virtual disks.
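For illustration, a zvol for a virtual disk could be created and located roughly as follows. The dataset name os/vm/test and the 20G size are made-up examples, and the create command is only printed, not run.

```shell
#!/bin/sh
# Map a zvol dataset name to the block device node Linux creates for it.
zvol_path() {
    echo "/dev/zvol/$1"
}

# Print the command that would create a sparse zvol ($1 = dataset, $2 = size).
# -p creates missing parent datasets, -s skips the space reservation.
plan_zvol() {
    echo "zfs create -p -s -V $2 $1"
}

plan_zvol os/vm/test 20G
zvol_path os/vm/test
```

The printed device path is what a virtual machine would be given as its disk.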

Backup Process

The backup server was configured to pull a backup from the production server once a day, and to sleep when not doing this. The reason to sleep is to save electricity. A watt of continuous power costs about £1.40 a year, so this saves me about £30 a year.
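The arithmetic behind those figures can be checked with a few lines of shell. The tariff of £0.16 per kWh is an assumption, chosen because it reproduces the £1.40 figure. It is not a quoted price.

```shell
#!/bin/sh
# Cost in pounds of drawing a constant load for a year.
# $1 = watts, $2 = price per kWh in pounds (assumed tariff)
annual_cost() {
    awk -v w="$1" -v p="$2" 'BEGIN { printf "%.2f\n", w * 24 * 365 / 1000 * p }'
}

# Average watts implied by a yearly saving.
# $1 = pounds saved per year, $2 = pounds per watt-year
implied_watts() {
    awk -v s="$1" -v c="$2" 'BEGIN { printf "%.0f\n", s / c }'
}

annual_cost 1 0.16      # prints 1.40
implied_watts 30 1.40   # prints 21
```

So a saving of £30 a year corresponds to avoiding roughly 21 W of average draw.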

The backup process runs from a crontab entry at 2:00am. The process ends by setting a suspend until 1:50 the next morning using this code:

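#!/bin/sh

# Epoch time of 01:50 tomorrow (GNU date syntax)
target=$(date +%s -d 'tomorrow 01:50')

echo "sleeping"

# Suspend to RAM and wake at the target time
/usr/sbin/rtcwake -m mem -t "$target"

echo "woken"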
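Before trusting a script like this to suspend a remote machine, it can help to check the computed wake time by hand. The sketch below does that without suspending anything. The crontab line in the comment uses a hypothetical script path.

```shell
#!/bin/sh
# Hypothetical root crontab entry (the script path is an assumption):
#   0 2 * * * /root/bin/backup-and-suspend.sh

# Print the epoch time of 01:50 tomorrow (GNU date syntax).
next_wake() {
    date +%s -d 'tomorrow 01:50'
}

# Succeed only if the given epoch time is in the future.
wake_is_sane() {
    [ "$1" -gt "$(date +%s)" ]
}

target=$(next_wake)
if wake_is_sane "$target"; then
    echo "would wake at: $(date -d "@$target")"
else
    echo "wake time is in the past, not suspending" >&2
fi
```

Only once the printed time looks right would the rtcwake call be added back.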

A pool (“n2”) on the backup server (“nas3”) was created on the 4TB disk to hold the backups. Each dataset on the production server (“shed9” **) was copied to the backup server using zfs send and recv commands. Because I had a couple of TB of data, this initial copy was done by temporarily attaching the 4TB disk to the production server.

The zrep program (http://www.bolthole.com/solaris/zrep/) provides a means of managing incremental zfs backups.

The initial setup of the dataset was equivalent to:

from=shed9

to=nas3

frompool=p5

topool=n2

ssh="ssh root@shed9"

# Delete any old zrep snapshots on source

$ssh "zfs list -H -t snapshot -o name -r $frompool/$1 | grep zrep | xargs -n 1 zfs destroy"

# Use the following 3 lines if actually copying

zfs destroy -r $topool/$1

$ssh "zfs snapshot $frompool/$1@zrep_000001"

$ssh "zfs send $frompool/$1@zrep_000001" | zfs recv $topool/$1

# Set up the zrep book-keeping

$ssh "zrep changeconfig -f $frompool/$1 $to $topool/$1"

zrep changeconfig -f -d $topool/$1 $from $frompool/$1

$ssh "zrep sentsync $frompool/$1@zrep_000001"

And the periodic backup script looks like this:

# Find last local zrep snapshot

lastZrep=`zfs list -H -t snapshot -o name -r $pool/$1 | grep zrep | tail -1`

# Undo any local changes since that last snapshot

zfs rollback -r $lastZrep

# Do the actual incremental backup

zrep refresh $pool/$1
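The snapshot lookup in the script above relies on the order in which zfs lists snapshots. A slightly more defensive variant is sketched below, assuming all zrep snapshots are named @zrep_ followed by a number. It sorts by creation time and ignores child datasets. The dataset name n2/www in the example is made up.

```shell
#!/bin/sh
# Read snapshot names on stdin, print the last one created by zrep.
pick_last_zrep() {
    grep '@zrep_' | tail -n 1
}

# Look up the newest zrep snapshot of one dataset (not its children).
# Needs a host with zfs installed, so it is defined here but not called.
last_zrep_snapshot() {
    zfs list -H -t snapshot -o name -s creation -d 1 "$1" | pick_last_zrep
}

# Example using a hand-written snapshot list instead of real zfs output.
printf '%s\n' n2/www@daily n2/www@zrep_000001 n2/www@zrep_000002 | pick_last_zrep
```

The result of last_zrep_snapshot would then be passed to zfs rollback -r in place of $lastZrep.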

The normal use of zrep (i.e., what it was clearly designed to support) involves a push from the production server to the backup server. My use of it requires a “pull” because the production server doesn’t know when the backup server is awake. This reversal of roles complicates the scripts, resulting in those above, but it is all documented on the zrep website. Another side effect is that the resulting datasets remain read-write on the backup server. Any changes made locally are discarded by the rollback command each time the backup is performed.
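To show how the pull fits together across several datasets, here is a sketch of a nightly driver. The dataset names are hypothetical, and by default it only prints the commands it would run.

```shell
#!/bin/sh
# Nightly pull driver (sketch). Dataset names are hypothetical.
pool=n2
datasets="home www vm-disks"
DRY_RUN=${DRY_RUN:-1}   # default to printing commands only

# Run a command, or just print it when DRY_RUN is 1.
run() {
    if [ "$DRY_RUN" = 1 ]; then
        echo "+ $*"
    else
        "$@"
    fi
}

# Pull the next increment for every dataset, reporting any failure.
backup_all() {
    for ds in $datasets; do
        run zrep refresh "$pool/$ds" || echo "refresh of $pool/$ds failed" >&2
    done
}

backup_all
```

Setting DRY_RUN=0 in the environment would make it execute the zrep commands for real.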

Creating the backup virtual machines

Using virt-manager, I created virtual machines to match the essential virtual machines from my production server. The number of CPUs and memory space were squeezed to fit in the reduced resources.

Each virtual machine is created with the same MAC address as on the production server. This means that both copies cannot be run at the same time, but it also causes the least disruption when switching from one to the other, as ARP caches do not need to be refreshed.
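One way to collect the MAC addresses to copy, assuming the production VMs are managed by libvirt, is to pull them out of the domain XML. The sketch below does this with sed. The XML fragment and address are made up for the example.

```shell
#!/bin/sh
# Read libvirt domain XML on stdin and print each interface MAC address.
extract_macs() {
    sed -n "s/.*<mac address='\([0-9A-Fa-f:]*\)'.*/\1/p"
}

# Example with a hand-written XML fragment (the address is made up).
printf "%s\n" "<interface type='bridge'>" \
              "  <mac address='52:54:00:ab:cd:ef'/>" \
              "</interface>" | extract_macs
```

On a libvirt host the input could come from virsh dumpxml followed by the VM name.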

I also duplicated the NFS and Samba exports to match the production machine.

I tested the virtual machines one at a time by pausing the production VM and booting up the backup VM. Note that booting a backup copy of a device dataset is safe in the sense that any changes made during testing are rolled back before doing the incremental backup. It also means you can be cavalier with pulling the virtual plug on the running backup VM.

How would I do a failover?

I will assume the production server is dead, has kicked the bucket, has shuffled off this mortal coil, and is indeed an ex-server.

I would start by removing the backup & suspend crontab entry. I don’t think a rollback would work while a dataset is held open by a virtual machine, but I don’t want to risk it.

I would bring up my pfsense virtual machine on the backup. Using the pfsense UI, I would update the “files” DNS server entry to point to the IP address of the backup. This is the only dependency that the other VMs have on the broken server. Then I would bring up the essential virtual machines. That should be it.
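The command-line parts of that procedure could be sketched as below. The script name backup-and-suspend and the VM names other than pfsense are hypothetical, and the virsh commands are only printed.

```shell
#!/bin/sh
# Remove the nightly backup job from a crontab listing read on stdin.
# "backup-and-suspend" is a hypothetical script name.
drop_backup_job() {
    grep -v 'backup-and-suspend'
}

# Print the VM start order: firewall first, then the rest.
# VM names other than pfsense are made up.
plan_failover() {
    for vm in pfsense web mail; do
        echo "virsh start $vm"
    done
}

plan_failover
# To apply the crontab change for real:
#   crontab -l | drop_backup_job | crontab -
```

The DNS change in the pfsense UI remains a manual step between starting pfsense and starting the other virtual machines.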

Given that the most likely cause of the failover is admin error (rm -rf in the wrong place), recovery from failover would be a hard-to-predict partial software rebuild of the production server. If a plane really did land on my garage, recovery from failover may take a little longer depending on how much of the server hardware is functional when I pull it from the wreckage. And on that happy note, it’s time to finish.

**

For what it’s worth, this is the 9th generation of my file server. They started off running in the shed before moving to the garage at about shed7. But the name stuck.